Nate Silver – Signal vs Noise. Book review

Overconfidence in our predictive skills often leads to worse predictions when an event is surrounded by a high level of uncertainty. In Nate Silver's book “The Signal and the Noise: Why So Many Predictions Fail but Some Don’t”, uncertainty is represented by the noisiness of the data, which masks the true underlying signal of a given event.

Overconfidence often results in mistaking the noise for the signal: over-fitting, or over-specifying a deterministic solution to a more general, dynamic problem, which leads to wrong predictions.

This problem is compounded by the fear of failed predictions, or by a hesitancy to express results in a way that acknowledges the inherent uncertainty (for example, by presenting them as probabilities or odds).

It is further exacerbated when forecasters lack the incentive to make honest, consistent and accurate forecasts, or worse, have incentives to make biased, less honest, less reliable ones. Biased forecasts damage the credibility of the system as a whole and hold back progress in improving forecasting ability.

Silver discusses a wide range of arenas where predictions and forecasting have been used, highlighting the few success stories among the plethora of failures. These include areas as diverse as baseball, presidential elections, weather, earthquakes, chess, poker, financial markets, infectious disease outbreaks, climate and terrorist attacks.

The internet has exponentially increased the amount of data available to us, but it has also increased the likelihood of greater errors in forecasting because of the degree of noise present in the data. Forecasters often mistake correlations in the data for causation. Predictions should be data driven, but they should also tell a story that is rooted in reality, in fundamentals, or in the underlying process. The problem or situation being forecast should not be viewed merely as a set of variables and equations, or as data for data’s sake alone.

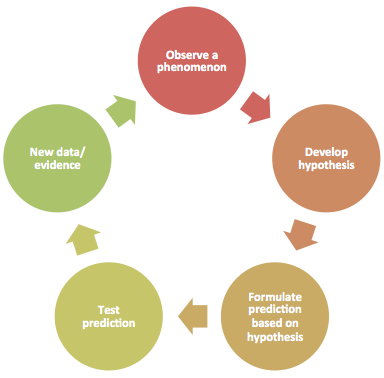

Silver advocates a probabilistic, Bayesian approach to thinking and decision making. He states that a probabilistic methodology is an essential part of scientific forecasting. It encourages us to place bets on our understanding of events, that is, on our hypotheses. The Bayesian approach recognizes that we all have prior beliefs regarding certain events and that these prior beliefs often color our interpretation of the data that we see. However, as new evidence is obtained, the approach allows us to continually revise and improve our interpretations and views and to re-test our hypotheses. We weigh the strength of the new evidence against the overall strength of the hypothesis or theory and revise (or keep) our beliefs accordingly. Sometimes new evidence may suggest that the original forecast is incorrect. It is more important to correct such a forecast than to persist in sticking to it.

A frequent feedback loop helps keep the model well calibrated to the data. Over time, as more data becomes available, divergent beliefs should converge toward one another.

Figure 1: Bayesian Approach
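The updating process described above can be sketched in a few lines of Python. This is only an illustration, not something taken from the book: the function name `bayes_update`, the two forecasters and all of the probabilities are assumptions chosen to show how very different priors move toward each other when both forecasters see the same evidence.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' theorem."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1.0 - prior) * p_evidence_if_false)


# Hypothetical starting beliefs: two forecasters who disagree strongly.
beliefs = {"skeptic": 0.05, "believer": 0.80}
initial_gap = abs(beliefs["believer"] - beliefs["skeptic"])

# Assumed evidence: each observation is three times as likely
# if the hypothesis is true (0.6) as if it is false (0.2).
for step in range(1, 9):
    beliefs = {
        name: bayes_update(p, 0.6, 0.2) for name, p in beliefs.items()
    }
    gap = abs(beliefs["believer"] - beliefs["skeptic"])
    print(f"step {step}: skeptic={beliefs['skeptic']:.3f} "
          f"believer={beliefs['believer']:.3f} gap={gap:.3f}")

final_gap = abs(beliefs["believer"] - beliefs["skeptic"])
```

Each pass through the loop is one turn of the feedback loop: the posterior from the previous observation becomes the prior for the next. If the evidence pointed the other way (more likely when the hypothesis is false), the same function would pull both beliefs down instead.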

Silver stresses the importance of using simulation models over purely statistical ones. The latter may be used as a baseline case, but the former help us to consider and imagine a wider range of events.

The uncertainty in probabilistic models should prompt action rather than hinder it. Forecasters have a tendency to mistake unfamiliar situations for improbable ones, which leaves them unprepared for, and vulnerable to, such situations when they occur. Probabilistic approaches, especially in situations with a higher degree of uncertainty, encourage us to think of possible events that we are least likely to consider. By accepting that we don’t know everything, and by not fearing our failures, we improve the chances of getting some of our predictions right.

Other issues that were highlighted in the book include:

- Understanding the dangers of extrapolation where the current trend is assumed to continue into the future. The impact is greater where the underlying growth is exponential.

- Keeping track of how our predictions have turned out (successes and failures) helps us to better understand the way we distort and interpret the signals that we receive in data.

- Never ignore data, especially in situations where the events being studied are rare.

- A statistically significant result or a large R-squared may indicate that a given model fits the data better, but does that fit represent a fit to the signal or to the noise? Silver in particular is skeptical about frequentist tests of statistical significance.

- In complex models involving huge computing power, bugs in the code can undermine the hard work of the most competent forecasters. It is important to question and review the model when faced with unexpected or hard-to-explain results, and to improve processes through trial and error.

- For predictions, the focus should be on self-improvement or greater awareness of one’s limitations. However, when evaluating the level of skill in predictions, we tend to focus on results, with an emphasis on good results over bad ones. In doing this we attribute greater skill to those obtaining good results, overlooking the possibility that luck or the underlying work of others contributed to those results. Our focus must instead be process oriented, i.e. what was the process followed in the underlying model that led to those results?

- Aggregating or averaging individual forecasts often leads to better predictions than the individual forecasts themselves. This does not necessarily imply good forecasts, as it depends on the quality of the individual forecasts. Sometimes it may be beneficial to ignore some or most of the individual forecasts and go with what you consider to be the best, in order to avoid watering down the overall result. Also, averaging of individual forecasts should not be confused with consensus among forecasters. In the latter case a few people may influence the forecasts of others. For averaging or aggregation, an important assumption is that the individual forecasts have been obtained independently, using different models and/or data.

- Forecasters often fail to understand the limitations presented by small sample sizes or sparse data.

- The very act of prediction may change the way people behave, which in turn could influence the outcome and hence the accuracy of the prediction.

- Sometimes herding behavior among people overconfident about their predictions and models may influence or upset the way an otherwise rational system would work.

- Forecasting has worked better for situations like weather forecasting because of observable laws of physics where the causal relations are known. In such situations it is important to obtain as much knowledge as possible of the structure behind the system. For other situations where the laws are less observable or unknown (like financial markets and earthquakes), predictions have a poorer track record because of the complexity of the system.
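The point above about averaging independent forecasts, as opposed to forecasts shaped by consensus or herding, can be illustrated with a small simulation. This is a rough sketch under stated assumptions, not a model from the book: the function `mean_abs_error_of_average`, the normally distributed errors and every parameter value are hypothetical. Each forecaster's error is split into a part shared by the whole group (herding) and a part of their own (independence), with the total error size kept the same in both group scenarios.

```python
import random
import statistics


def mean_abs_error_of_average(n_forecasters, shared_sd, own_sd,
                              trials=2000, seed=0):
    """Average absolute error of the group's mean forecast over many trials."""
    rng = random.Random(seed)
    truth = 0.0
    errors = []
    for _ in range(trials):
        # Error common to every forecaster in this trial (herding).
        shared = rng.gauss(0.0, shared_sd)
        # Each forecast adds an independent error of its own.
        forecasts = [truth + shared + rng.gauss(0.0, own_sd)
                     for _ in range(n_forecasters)]
        errors.append(abs(statistics.fmean(forecasts) - truth))
    return statistics.fmean(errors)


# One forecaster with error standard deviation 1.
single = mean_abs_error_of_average(1, shared_sd=0.0, own_sd=1.0)

# Ten independent forecasters, each with the same error size.
independent = mean_abs_error_of_average(10, shared_sd=0.0, own_sd=1.0)

# Ten herded forecasters: most of each error is shared.
# 0.9**2 + 0.19 = 1, so each forecaster's total error variance is still 1.
herded = mean_abs_error_of_average(10, shared_sd=0.9, own_sd=0.19 ** 0.5)

print(f"single forecaster:       {single:.3f}")
print(f"average of independent:  {independent:.3f}")
print(f"average of herded group: {herded:.3f}")
```

Under these assumptions the independent errors largely cancel in the average, while the shared error does not, so the herded group's average is only a little better than a single forecaster.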